AI Governance Compliance for US SMBs - 2025 Step-by-Step Blueprint

Practical 2025 AI governance blueprint for US small and medium businesses. Step-by-step compliance guide with free templates, real case studies, and NIST-based standards. Stay legal, ethical, and future-proof your AI.

Introduction: Why AI Governance Matters for Small Businesses in the US

Artificial Intelligence is transforming businesses. Whether it’s automating customer support, optimizing logistics, or scoring credit applications, AI is everywhere. But as AI capabilities grow, so does the responsibility to govern it ethically and legally.

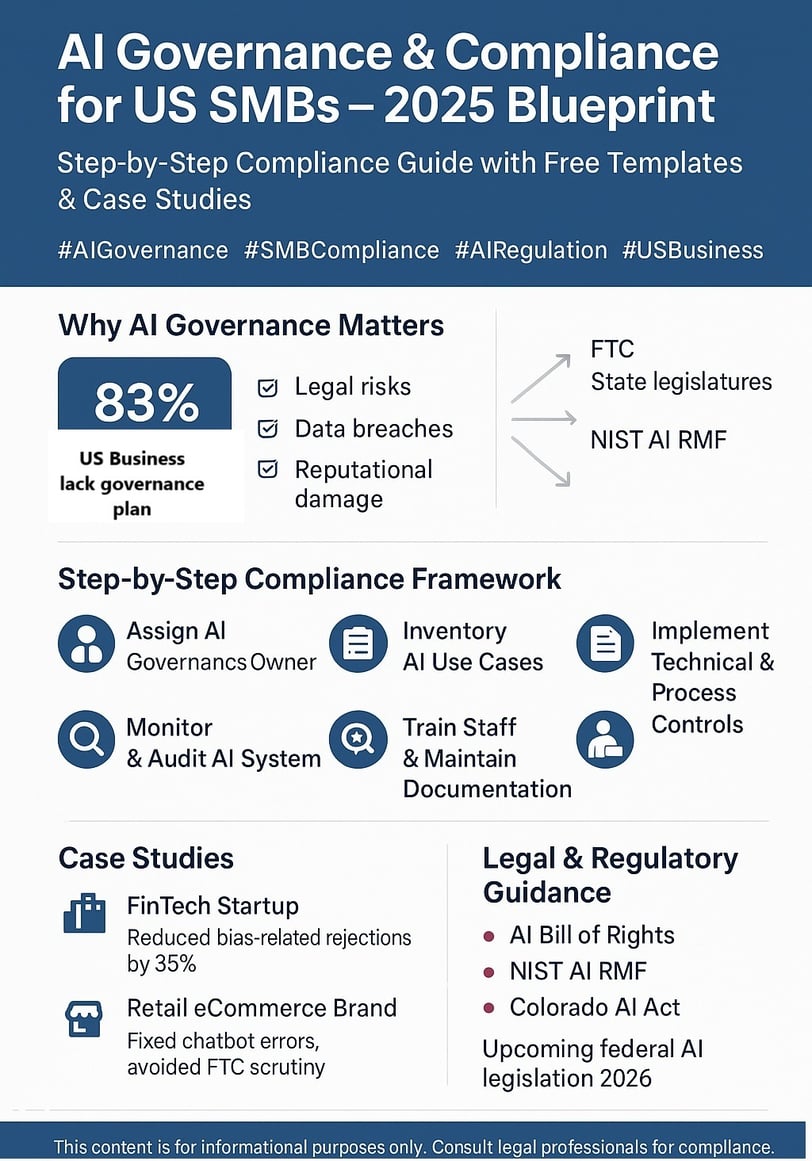

Here’s the challenge: 83% of U.S. businesses using AI don’t have a governance plan. That gap exposes them to legal risks, data breaches, reputational damage, and regulatory penalties. In 2025, this risk is only intensifying due to increasing government scrutiny, especially from the FTC, state legislatures, and data protection authorities.

Most small and medium-sized businesses (SMBs) think AI governance is for tech giants. That’s a myth. In fact, SMBs are more vulnerable to legal action and public backlash due to limited in-house legal and compliance resources. That’s why this guide exists.

We’re giving you a no-nonsense blueprint: how to build, document, and maintain a lightweight, NIST-aligned AI governance framework tailored for your size, sector, and state.

Step 1: Assigning Responsibility and Building a Governance Structure

Start by creating clear ownership for AI accountability.

Every organization needs a designated person or role responsible for AI governance. This can be your Chief Technology Officer, your compliance manager, or a newly designated “AI Governance Owner.” What matters is that someone owns the function.

In addition, form a small AI oversight group. It doesn’t need to be a huge committee. Even a three-person team representing tech, legal, and operations is enough. Schedule quarterly meetings. Their job: monitor AI use, audit risks, document decisions, and adjust policies.

Make responsibilities crystal clear. Who monitors chatbot conversations? Who reviews AI hiring tools for bias? Who’s in charge of responding if something goes wrong?

Write it down. Even a two-page document is a great starting point.

Step 2: Inventory and Classify All Your AI Use Cases

Next, map where and how AI is used in your business.

Walk through every part of your company and list every system that uses automation or AI-like decision-making. This includes:

AI chatbots on your website

Resume scanning tools for hiring

Product recommendation engines

Predictive analytics in finance or marketing

Any use of generative AI for content or legal documents

Once you’ve got your list, classify each AI tool by risk level:

High risk: Affects people’s rights or access to jobs, loans, housing, healthcare

Medium risk: Automates decisions that affect money, personal data, or customer service

Low risk: Internal analytics, image generators for marketing, etc.

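Use available federal guidance, such as the NIST AI Risk Management Framework and the White House's Blueprint for an AI Bill of Rights, to inform your classification. Neither document prescribes these three tiers, so treat them as a working convention and write down the reasoning behind each rating.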
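A minimal Python sketch of how the inventory and tiering above could be recorded. The area tags, the `AIUseCase` fields, and the sample entries are illustrative assumptions, not categories defined by NIST or the White House blueprint, so adapt them to your own business.

```python
from dataclasses import dataclass
from enum import Enum


class Risk(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


# Hypothetical tags that mirror the three tiers described above.
HIGH_RISK_AREAS = {"jobs", "loans", "housing", "healthcare"}
MEDIUM_RISK_AREAS = {"money", "personal_data", "customer_service"}


@dataclass
class AIUseCase:
    name: str
    owner: str
    affects: frozenset  # areas that the tool's decisions touch


def classify(use_case: AIUseCase) -> Risk:
    """Return the highest tier whose areas overlap with the use case."""
    if use_case.affects & HIGH_RISK_AREAS:
        return Risk.HIGH
    if use_case.affects & MEDIUM_RISK_AREAS:
        return Risk.MEDIUM
    return Risk.LOW


# Sample inventory (made-up entries for illustration).
inventory = [
    AIUseCase("Website chatbot", "Support lead", frozenset({"customer_service"})),
    AIUseCase("Resume screener", "HR manager", frozenset({"jobs", "personal_data"})),
    AIUseCase("Marketing image generator", "Marketing lead", frozenset()),
]

for item in inventory:
    print(f"{item.name}: {classify(item).value}")
```

A tool that touches both a high-risk and a medium-risk area is rated high, which keeps the classification conservative. A spreadsheet with the same columns works just as well if nobody on the team writes code.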

Step 3: Write or Update Your AI Usage Policy

Now that you know where AI is used and how risky it is, document your rules.

This step often gets skipped. Don’t make that mistake. A good AI policy is your first line of defense against regulators, lawsuits, and PR disasters.

Your policy should answer the following:

What types of AI tools are allowed and banned?

Who approves new AI implementations?

What testing is required before deployment?

What fairness, security, and privacy standards must be followed?

What happens if a customer complains or an AI tool misbehaves?

Make it public-facing if needed. For internal tools, keep a detailed internal version.

You can download templates (linked at the end) and adapt them to your business.
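Some teams find it useful to express the core policy questions as data so that a new deployment request can be checked the same way every time. The sketch below is one possible shape, written in Python. The class names, the banned category, the approver role, and the test names are all hypothetical placeholders rather than part of any standard.

```python
from dataclasses import dataclass, field


@dataclass
class AIPolicy:
    banned_categories: set   # tool categories the policy forbids
    approver_role: str       # who must sign off on new deployments
    required_tests: set      # tests that must pass before go-live


@dataclass
class DeploymentRequest:
    tool_name: str
    category: str
    approved_by: str = ""
    tests_passed: set = field(default_factory=set)


def blocking_issues(policy: AIPolicy, request: DeploymentRequest) -> list:
    """List every reason the request does not yet satisfy the policy."""
    issues = []
    if request.category in policy.banned_categories:
        issues.append(f"category '{request.category}' is banned")
    if request.approved_by != policy.approver_role:
        issues.append(f"needs approval from {policy.approver_role}")
    missing = policy.required_tests - request.tests_passed
    if missing:
        issues.append("missing tests: " + ", ".join(sorted(missing)))
    return issues


# Example values are assumptions for illustration only.
policy = AIPolicy(
    banned_categories={"emotion_recognition"},
    approver_role="AI Governance Owner",
    required_tests={"bias", "security", "privacy"},
)
request = DeploymentRequest(
    tool_name="Resume screener",
    category="hiring",
    approved_by="AI Governance Owner",
    tests_passed={"security", "privacy"},
)
print(blocking_issues(policy, request))
```

An empty list means the request clears the written policy. Anything else is a to-do list for whoever submitted it.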

Step 4: Build In Technical and Process Controls

Now it’s time to make sure your systems match your policy.

Start with technical controls. These include:

Bias checks: If you’re using AI for resumes or loan decisions, test for bias across race, gender, disability, etc. Open-source tools like Aequitas or IBM’s AI Fairness 360 can help.

Explainability: Wherever possible, use models that provide explanations. For example, why did a loan application get rejected?

Privacy controls: Make sure customer data used in AI models is anonymized where possible, collected with consent, and stored securely.

Security testing: Protect your AI systems against prompt injection, adversarial inputs, and model theft.
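One common first-pass bias check compares selection rates across groups and applies the four-fifths rule of thumb from the EEOC's Uniform Guidelines on Employee Selection Procedures. The Python sketch below is a hand-rolled illustration, not the API of Aequitas or AI Fairness 360. The group labels and sample data are made up, and a ratio below 0.8 is a prompt for closer review rather than a legal finding.

```python
from collections import Counter


def selection_rates(records):
    """records: iterable of (group, was_selected) pairs. Returns {group: rate}."""
    totals, selected = Counter(), Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def adverse_impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so no disparity can be measured.
        return {g: 1.0 for g in rates}
    return {g: r / best for g, r in rates.items()}


# Made-up outcomes: group A selected 50 of 100, group B selected 30 of 100.
records = (
    [("A", True)] * 50 + [("A", False)] * 50
    + [("B", True)] * 30 + [("B", False)] * 70
)

rates = selection_rates(records)
ratios = adverse_impact_ratios(rates)

# Flag any group whose ratio falls below the four-fifths threshold.
flagged = [g for g, r in ratios.items() if r < 0.8]
print(rates, ratios, flagged)
```

Small sample sizes make these ratios noisy, so a flagged group is best treated as a reason to collect more data and look at the model's inputs.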

Next, process controls:

Conduct impact assessments before deploying AI tools.

Use checklists to ensure legal and ethical standards.

Vet third-party AI vendors thoroughly. Ask for their governance documentation and compliance certifications.

Step 5: Establish Monitoring and Audit Processes

Once your AI systems are live, don’t set and forget.

Schedule regular reviews of each AI system. Monitor performance, fairness, security, and customer feedback. Automate alerts for model drift or performance issues.
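Drift alerts can start simple. The Python sketch below computes a Population Stability Index (PSI) between the scores a model produced at launch and the scores it produces now. The 0.1 and 0.25 thresholds are widely used rules of thumb, not a regulatory requirement. The function names, the bin count, and the sample data are assumptions for illustration.

```python
import math


def psi(baseline, current, bins=10, eps=1e-6):
    """Population Stability Index between two lists of numeric scores."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid zero width if all values match

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    base_p, cur_p = proportions(baseline), proportions(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base_p, cur_p))


def drift_status(score, warn=0.1, alert=0.25):
    """Map a PSI score to a simple status label."""
    if score >= alert:
        return "alert"
    if score >= warn:
        return "warn"
    return "ok"


# Made-up scores: the current batch is concentrated in the upper range.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

print(drift_status(psi(baseline, baseline)))
print(drift_status(psi(baseline, shifted)))
```

Running a check like this on a schedule and emailing the oversight group when the status is not "ok" is usually enough for a small team.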

Create a simple reporting process. Your AI oversight group should meet quarterly and issue an internal report. This keeps the leadership informed and provides a paper trail in case of legal or regulatory inquiry.

Set up a response plan. If something goes wrong—a biased chatbot response, a misclassification error—you need an escalation protocol. Who investigates? Who informs legal? What gets disclosed publicly?

Step 6: Training and Documentation

AI compliance isn’t just about technology—it’s about people.

Train your staff, especially customer service, HR, and marketing teams. They need to understand:

How to use AI tools responsibly

What not to do with customer data

How to recognize when AI is making poor decisions

How to report potential issues

Maintain documentation. This includes your:

AI policy

Risk inventory

Impact assessments

Vendor contracts

Audit results

Training logs

Good documentation can help protect you in a lawsuit, investigation, or partnership negotiation, because it shows what you decided, when, and why.
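Documentation only helps if it stays current. The Python sketch below checks the six record types listed above for anything missing or overdue for review. The 90-day window is an assumption chosen to match a quarterly oversight cadence, and the dates are made up.

```python
from datetime import date, timedelta

REQUIRED_DOCS = [
    "AI policy",
    "Risk inventory",
    "Impact assessments",
    "Vendor contracts",
    "Audit results",
    "Training logs",
]


def documentation_gaps(last_reviewed, today, max_age_days=90):
    """Return (missing, stale) lists of document names.

    last_reviewed maps a document name to the date it was last reviewed.
    """
    cutoff = today - timedelta(days=max_age_days)
    missing = [d for d in REQUIRED_DOCS if d not in last_reviewed]
    stale = [
        d for d in REQUIRED_DOCS
        if d in last_reviewed and last_reviewed[d] < cutoff
    ]
    return missing, stale


# Made-up review dates for illustration.
last_reviewed = {
    "AI policy": date(2025, 6, 1),
    "Risk inventory": date(2025, 1, 15),
    "Vendor contracts": date(2025, 5, 20),
    "Training logs": date(2025, 4, 10),
}

missing, stale = documentation_gaps(last_reviewed, today=date(2025, 6, 30))
print("Missing:", missing)
print("Stale:", stale)
```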

Step 7: Case Studies of Success

Here’s how real SMBs are using this blueprint to avoid problems and unlock growth.

Example 1: A FinTech Startup

They used AI to evaluate creditworthiness. After a minor PR incident around bias, they adopted this framework. Within three months, they cut bias-related rejections by 35%, passed a third-party audit, and secured a $1.5 million loan facility.

Example 2: A Retail eCommerce Brand

They deployed an AI chatbot that accidentally gave misleading refund policies. The oversight team used the framework to retrain the bot, update the policy, and establish an incident protocol. They retained customer trust and avoided FTC scrutiny.

Step 8: Legal and Regulatory Guidance to Watch

Several important documents and acts shape the U.S. AI governance space:

Blueprint for an AI Bill of Rights (White House OSTP, 2022) – Non-binding principles for automated systems

NIST AI RMF 1.0 (2023) – Voluntary Risk Management Framework referenced by many agencies

Colorado AI Act (SB 24-205, 2024) – First comprehensive U.S. state law regulating high-risk AI

FTC AI Enforcement Warnings – Against deceptive or biased AI claims

EEOC AI Guidance (2023) – For HR-related algorithms and discrimination

Comprehensive federal AI legislation may still emerge, though its timing is uncertain. If you build your governance on these pillars now, you’ll be well-prepared for whatever form it takes.

Step 9: Download Free AI Governance Toolkit

You can access a free AI governance starter kit tailored for US small businesses. This includes:

AI Use Inventory Template

AI Risk Assessment Form

AI Policy Template

Vendor Evaluation Checklist

Quarterly Oversight Report Format

https://coe.gsa.gov/docs/AICoP-AIGovernanceToolkit.pdf

Step 10: Final Checklist for Your AI Governance Readiness

Do you have an AI governance owner?

Have you inventoried all your AI tools?

Did you classify risk levels using NIST guidelines?

Is there a documented policy in place?

Are technical and process controls operational?

Are staff trained and records kept?

Is there a monitoring and audit process?

If you’ve said “yes” to all of the above, congratulations—you’re AI-ready for 2025 and beyond.
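If you want to track the checklist over time, a few lines of Python are enough. This is a sketch only: the item wording is a shortened paraphrase of the questions above, and treating anything other than an explicit yes as open is a design assumption.

```python
CHECKLIST = [
    "AI governance owner assigned",
    "AI tools inventoried",
    "Risk levels classified",
    "Policy documented",
    "Controls operational",
    "Staff trained and records kept",
    "Monitoring and audit process in place",
]


def open_items(answers):
    """Return checklist items that were not answered with an explicit True."""
    return [item for item in CHECKLIST if answers.get(item) is not True]


# Made-up answers: everything is done except staff training.
answers = {item: True for item in CHECKLIST}
answers["Staff trained and records kept"] = False

remaining = open_items(answers)
print("Ready" if not remaining else f"Open items: {remaining}")
```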

Conclusion: Stay Ahead of the Curve

AI is not just a tool—it’s a liability if not governed well. But when done right, it builds trust, unlocks funding, attracts customers, and future-proofs your business.

The good news? You don’t need a PhD or a legal team to do this. Just follow this step-by-step guide, use the templates, and start simple.

Governance isn’t a box to tick. It’s a competitive advantage.

Disclaimer:

This content is for informational purposes only and does not constitute legal or regulatory advice. Please consult legal and compliance professionals for customized solutions.